“We have created a civilization with Stone Age emotions, medieval institutions, and godlike technology.”

– Edward O. Wilson, The Social Conquest Of Earth.

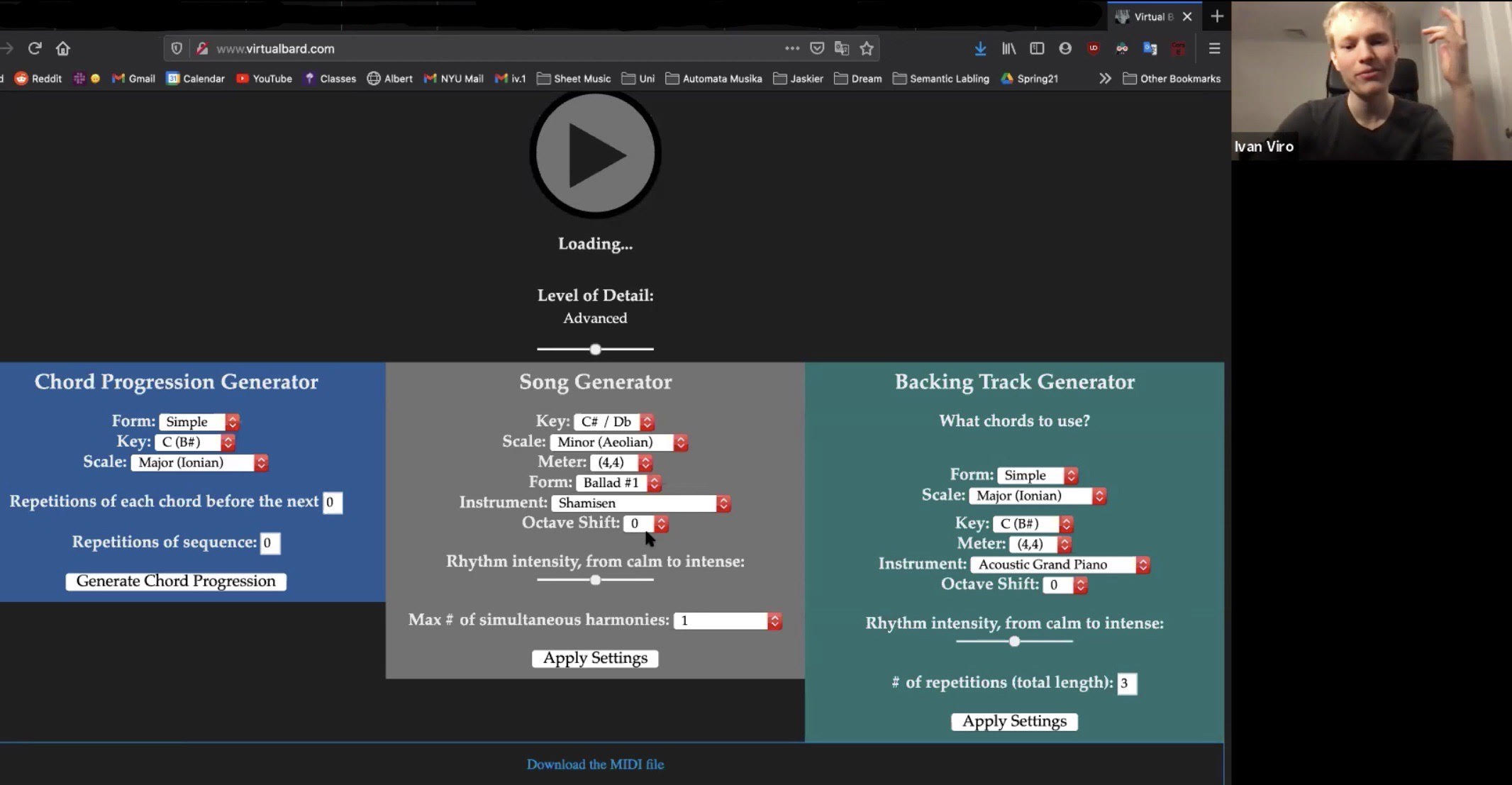

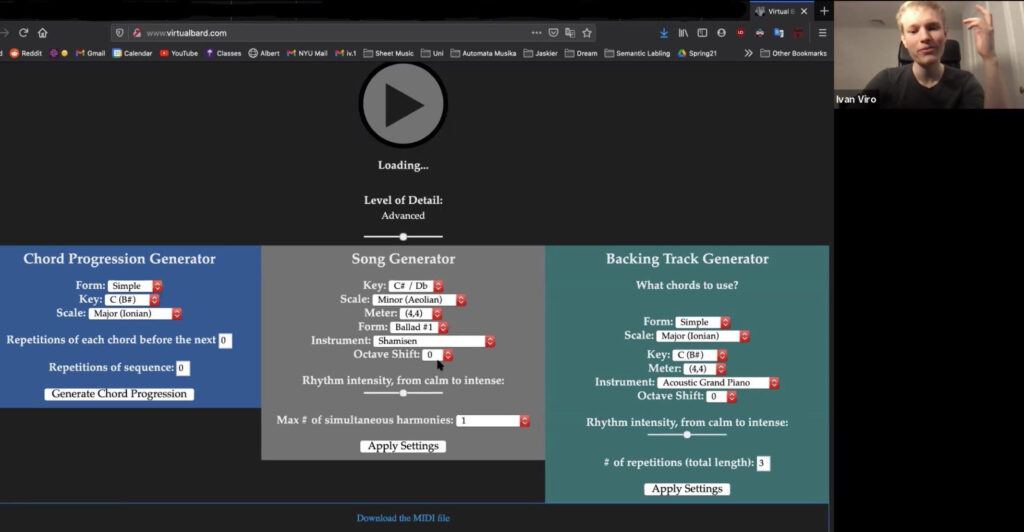

Ron White, author of How Computers Work, compares the history of computers to the mutation and natural selection that carry organic species to new shores. Each step in their evolutionary maze is the innovators’ response to the conditions of that place and time, much like the adaptations along the human timeline. No one can tell when machines will acquire the problem-solving abilities that define us as humans, but as Max Tegmark puts it in his book Life 3.0, “many AI researchers had recently experienced a ‘holy s**t!’ moment, when they witnessed AI doing something they weren’t expecting to see for many years.” For me, it came when my friend Ivan Viro, an undergraduate computer science student at NYU, used his spare time during the lockdown to write a music-composing program.

“Besides its obvious emotional and psychological dimensions, music is also a rational system that obeys complex and relatively rigorous construction laws,” writes Patrick Saint Dizier, CNRS research director, in his article Music And Artificial Intelligence. “Aristotle argued that music is numbers made audible.” Indeed, people have been trying to establish distinct mappings between music and human feelings since the 16th century. “C minor: obscure and sad. D major: joyous and very warlike,” begin the key and mode descriptions in Charpentier’s Règles de Composition (1682). The “Theory Of Musical Equilibration,” which has its roots in the early-twentieth-century music-psychology teachings of Ernst Kurth, compares the effect of musical suspension (changes in a listener’s state of mind) to physical movement. “When a major tonic sounds, we feel only a very mild desire for something indefinable and substantial to change. From an emotional perspective, we can describe this sense of will as identifying with a sense of contentment with the here and now. When we hear an augmented chord, we cannot clearly identify with a will that things not change: listeners are left questioning. By a similar token, the character of the whole-tone scale corresponds to the mental image of floating weightlessly without a deliberate focus” (Theoretical Observations – the Theory of Musical Equilibrium, Daniela and Bernd Willimek).

“The most time-consuming part is translating emotional characteristics into musical terms,” says Ivan, describing the coding process. “A head-on approach results in any positive sentiment becoming a quick tune in the major key. On the other hand, too much overlap in definitions of sounds for matching moods, such as love and anxiousness, offsets other parameters. It takes a lot of fine-tuning and experimentation.”
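The “head-on approach” Ivan mentions can be pictured with a small sketch. Everything in it is an assumption made for illustration: the `MusicalParams` type, the `VALENCE` scores, and the tempo values are hypothetical and are not taken from Ivan’s program. The sketch only shows why a single positive-or-negative rule flattens very different moods into the same tune.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MusicalParams:
    """A deliberately tiny, hypothetical description of a tune."""
    mode: str       # "major" or "minor"
    tempo_bpm: int  # beats per minute


# Hypothetical valence scores in [-1, 1]; invented for this example.
VALENCE = {
    "joy": 0.9,
    "love": 0.7,
    "calm": 0.4,
    "anxiety": -0.6,
    "grief": -0.9,
}


def naive_params(mood: str) -> MusicalParams:
    """Head-on mapping: any positive mood becomes a quick major tune,
    any other mood becomes a slow minor one."""
    if VALENCE[mood] > 0:
        return MusicalParams(mode="major", tempo_bpm=140)
    return MusicalParams(mode="minor", tempo_bpm=70)
```

With this rule, `naive_params("joy")`, `naive_params("love")`, and `naive_params("calm")` all return the same parameters, which is exactly the loss of nuance the quote describes. Separating them would take more parameters and more hand-tuned rules per mood.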

This manual outlining of the musical structures that depict different narratives is the main difference between the two general types of music-generating systems. “Pieces generated by machine-learned models will have features distributed in ways similar to the original corpus” (A Functional Taxonomy Of Music Generation Systems, Herremans, Chuan & Chew 2017), meaning that such programs find the prominent features of the musical style of interest and incorporate them into the output. The obvious challenge with these algorithms is finding the balance between similarity to the corpus and novelty or creativity. Ivan’s program, by contrast, relies on input from a user – the desired rhythm, melody, harmony, etc. – rather than on the availability of data on which to base the product.
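To make the corpus-driven side of this contrast concrete, here is a minimal sketch that uses a first-order Markov chain as a simple stand-in for a machine-learned model. It is not the method of any system mentioned in this article. The toy `CORPUS` and the function names are made up for illustration.

```python
import random
from collections import defaultdict


def train_transitions(corpus):
    """Record, for every note, which notes follow it in the corpus."""
    transitions = defaultdict(list)
    for melody in corpus:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=None):
    """Walk the transition table, picking each next note at random
    in proportion to how often it followed the current note."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # no observed continuation for this note
            break
        melody.append(rng.choice(options))
    return melody


# Toy corpus of three short melodies (invented for this example).
CORPUS = [
    ["C", "D", "E", "C"],
    ["E", "F", "G", "E"],
    ["C", "E", "G", "C"],
]
```

Calling `generate(train_transitions(CORPUS), "C", 16)` yields a melody in which every step from one note to the next already occurs somewhere in the corpus, so the output resembles its source by construction. Because the model only looks one note back, it has no notion of a recurring theme, which hints at why long-term structure is hard for purely data-driven generation.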

“One aspect is that it’s scalable,” says Ivan when asked about the advantages and limitations of his approach. “One can create a whole opera, just because the user is in control of the motifs. Think of The Lord Of The Rings soundtrack, each character there has a motif. The hobbits’ theme is recognizable throughout the film. Machine learning would have great difficulty generating cohesive long-term structures. The motif would sound believable for half a minute, but afterward it starts to stumble. In terms of limitations, my biggest fear is what happened to David Cope. He is one of the pioneers in algorithmic compositions and he created a program, ‘Emily Howell,’ that was so manually operated that it took weeks to work with it. He ended up not showing it to many people. And that’s the main difficulty – to make the computer determine the needed style on its own. It’s a question that wasn’t solved yet in the music theory itself, what determines style?”

The reason why music theory is a recent science, originating mostly in the early twentieth century, is that music was previously played differently from what the surviving writings suggest. A 1610 manuscript from London, A Varietie Of Lute Lessons, instructs the player to strain the top string of a lute to maximum tension and then tune the rest of the strings in relation to it. This means that two lute players could not perform together, as each instrument would settle on its own pitch and the two would sound dissonant. This is one of the reasons why orchestras did not appear until it became standard to tune instruments to a specific tone, which happened relatively late. Furthermore, musical styles progressed at an increasingly fast rate until about the early twentieth century. The high complexity of compositions such as Brahms’ works made them almost impossible to describe. As a result, music evolved more quickly than academic interest in it.

“My theory is that musical style has parameters similar to linguistic structures. Syntax, morphemes, etc.,” says Ivan. “To be able to describe differences between composers and their emotional equivalents, one needs to determine those elements. Eventually, programs will be able to create music from other mediums. For example, you upload a screenplay and within seconds receive the soundtrack for your movie.”

With the increasing demand for background music in video games and other media, composition will become more automated. However, overcoming the current obstacles in the field, such as generating music with long-term structure and capturing higher-level content such as emotion and tension, will require a closer study of music as a system.